Key Takeaways

- A proposed machine-learning-based dynamic network optimization framework aims to improve Industrial IoT (IIoT) performance through better resource allocation and fault management.

- The methodology pairs deep reinforcement learning, for real-time adaptation, with tree-based ensemble models (Random Forest and Gradient Boosting) for more accurate fault prediction.

- Continuous learning from new data allows the system to adapt and maintain efficiency in changing industrial environments.

Dynamic Network Optimization Framework

A proposed methodology for Dynamic Network Optimization aims to improve Industrial IoT (IIoT) operations by integrating advanced machine learning techniques that adapt to real-time network conditions. The process begins by collecting data from IIoT devices: sensor readings are aggregated, filtered, and normalized, and statistical and machine learning methods are used to handle noise and missing values.
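The aggregate, filter, and normalize steps can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the framework's actual pipeline: median imputation stands in for "statistical methods" for missing values, a trailing moving average stands in for noise filtering, and min-max scaling stands in for normalization. All function names are hypothetical.

```python
from statistics import median


def fill_missing(values):
    """Replace None entries with the median of observed values (robust to spikes)."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    fill = median(observed)
    return [fill if v is None else v for v in values]


def moving_average(values, window=3):
    """Trailing moving average; early points average whatever samples exist so far."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1):i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def min_max_normalize(values):
    """Scale into [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def preprocess(readings, window=3):
    """Hypothetical pipeline: impute -> smooth -> normalize."""
    return min_max_normalize(moving_average(fill_missing(readings), window))


if __name__ == "__main__":
    raw_temps = [20.0, None, 22.0, 80.0, 21.0, 23.0]  # one dropout, one spike
    print(preprocess(raw_temps))
```

Ordering matters here: imputing before smoothing keeps the moving average from seeing gaps, and normalizing last keeps the scale consistent across sensors with different units.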

The methodology then extracts key features, such as temperature and operational history, that signal potential faults. A hybrid model architecture combines Deep Reinforcement Learning (DRL) for real-time adjustment with ensemble methods such as Random Forest (RF) and Gradient Boosting Machines (GBM) for more accurate prediction. In this combination, the DRL component responds to changing network dynamics, while RF and GBM capture complex relationships in the data to detect anomalies.
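Feature extraction for the tree-based models might look like the sketch below. It assumes features are computed over a sliding window of temperature readings plus an hours-since-service counter standing in for "operational history"; the feature set and names are hypothetical. Each `as_vector()` result is one row of the 2-D array-like that scikit-learn's `RandomForestClassifier.fit(X, y)` or `GradientBoostingClassifier.fit(X, y)` accepts.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class WindowFeatures:
    """Hypothetical per-machine features computed over one sliding window."""
    temp_mean: float
    temp_std: float
    temp_max: float
    temp_slope: float           # average temperature change per sample
    hours_since_service: float  # stands in for "operational history"

    def as_vector(self):
        """Flatten into the fixed-order list a tree-based classifier would consume."""
        return [self.temp_mean, self.temp_std, self.temp_max,
                self.temp_slope, self.hours_since_service]


def extract_features(temps, hours_since_service):
    """Summarize a window of temperature readings plus a usage counter."""
    if len(temps) < 2:
        raise ValueError("need at least two temperature samples")
    slope = (temps[-1] - temps[0]) / (len(temps) - 1)
    return WindowFeatures(
        temp_mean=mean(temps),
        temp_std=pstdev(temps),
        temp_max=max(temps),
        temp_slope=slope,
        hours_since_service=float(hours_since_service),
    )


if __name__ == "__main__":
    features = extract_features([60.0, 62.0, 64.0, 66.0], hours_since_service=1200)
    print(features.as_vector())
```

A steadily rising temperature shows up as a positive `temp_slope` even when the mean is still within limits, which is the kind of early-warning signal tree ensembles can split on.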

The framework’s performance is evaluated with standard metrics such as accuracy, precision, and latency, and its hyperparameters are tuned to maximize performance. Once validated, the model is deployed in live IIoT environments, where it continuously monitors conditions, predicts failures, and optimizes maintenance schedules.
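The evaluation metrics named above can be computed as follows. This is a sketch assuming binary fault labels (1 = fault) and per-request latency samples in milliseconds. Recall is added as an assumption because it matters for rare failures, and the nearest-rank percentile is one of several common percentile definitions.

```python
import math
import time


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary fault labels (1 = fault)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def latency_percentile(latencies_ms, pct):
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not latencies_ms:
        raise ValueError("no latency samples")
    ordered = sorted(latencies_ms)
    rank = math.ceil(pct / 100 * len(ordered))
    rank = min(max(rank, 1), len(ordered))  # clamp to a valid 1-based rank
    return ordered[rank - 1]


def time_call_ms(fn, *args):
    """Wall-clock duration of one call in milliseconds, via a monotonic clock."""
    start = time.perf_counter()
    fn(*args)
    return (time.perf_counter() - start) * 1000.0
```

Reporting a high percentile such as p95 rather than mean latency is a common choice for control loops, since occasional slow responses are what cause missed deadlines.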

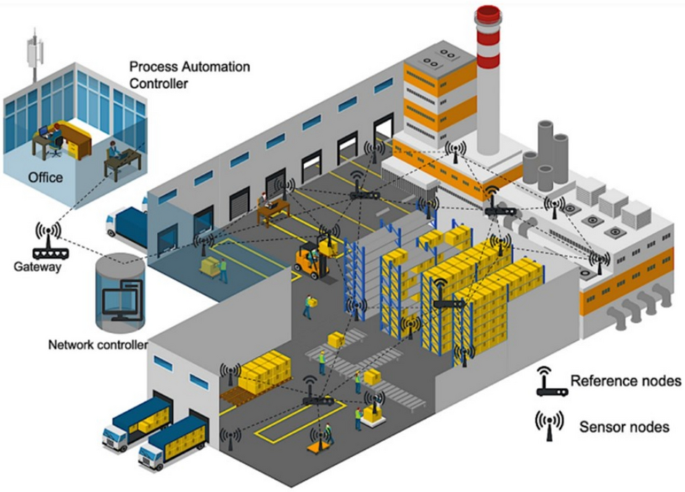

Central to this framework is an architecture that places sensor and reference nodes across the factory floor to collect data continuously from the machinery. This data flows through gateways to a centralized network controller, which handles resource allocation and predictive analysis. The architecture models the nodes and their connections as a graph, using graph theory to represent and optimize the network efficiently.
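To make the graph view concrete, here is a minimal sketch assuming an undirected adjacency-set representation and breadth-first search for fewest-hop routes from a node to the controller. The topology and node names are hypothetical, and hop count is only one possible edge cost; a real controller might instead weight links by energy, load, or link quality.

```python
from collections import deque

# Hypothetical factory-floor topology: sensors and a reference node feed
# gateways, which forward to a central network controller.
LINKS = [
    ("sensor_1", "gateway_A"),
    ("sensor_2", "gateway_A"),
    ("sensor_3", "gateway_B"),
    ("ref_1", "gateway_B"),
    ("sensor_2", "sensor_3"),  # direct sensor-to-sensor link
    ("gateway_A", "controller"),
    ("gateway_B", "controller"),
]


def build_graph(links):
    """Undirected adjacency sets built from an edge list."""
    graph = {}
    for u, v in links:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def route_to(graph, source, target):
    """Breadth-first search: fewest-hop path from source to target, or None."""
    parents = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in sorted(graph.get(node, ())):  # sorted for deterministic ties
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


if __name__ == "__main__":
    g = build_graph(LINKS)
    print(route_to(g, "sensor_3", "controller"))
```

Because BFS explores nodes in order of hop distance, the first time it reaches the controller is guaranteed to be along a shortest path in hops.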

The algorithms optimize network parameters with deep Q-learning, a deep reinforcement learning method, while RF performs real-time fault classification and GBM predicts rare failures. The outputs of RF and GBM are fed into the DRL system to refine IIoT operations continually.
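The idea of feeding classifier output into the reinforcement learner can be illustrated with a deliberately simplified sketch. Several assumptions apply. Tabular Q-learning is swapped in for deep Q-learning, so a lookup table replaces the neural network. The state is a hypothetical pair (high load, predicted fault), where the fault flag comes from averaging stand-in RF and GBM probabilities. The three actions and the reward table are invented for illustration.

```python
import random

ACTIONS = ("normal", "reroute", "maintain")  # hypothetical control actions


def fault_flag(rf_prob, gbm_prob, threshold=0.5):
    """Hypothetical fusion of RF and GBM fault probabilities into a 0/1 state bit."""
    return int((rf_prob + gbm_prob) / 2 >= threshold)


def toy_reward(state, action):
    """Invented reward table; state = (high_load, fault_predicted)."""
    high_load, fault = state
    if fault:
        return {"maintain": 1.0, "reroute": 0.0, "normal": -1.0}[action]
    if high_load:
        return {"reroute": 1.0, "normal": 0.0, "maintain": -0.5}[action]
    return {"normal": 1.0, "reroute": 0.0, "maintain": -0.5}[action]


def q_update(q, s, a, r, s_next, alpha, gamma):
    """Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max(q[s_next].values())
    q[s][a] += alpha * (r + gamma * best_next - q[s][a])


def train(steps=20000, alpha=0.1, gamma=0.5, epsilon=0.3, seed=0):
    """Epsilon-greedy tabular Q-learning on the toy environment."""
    rng = random.Random(seed)
    states = [(load, fault) for load in (0, 1) for fault in (0, 1)]
    q = {s: {a: 0.0 for a in ACTIONS} for s in states}
    s = rng.choice(states)
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.choice(ACTIONS)           # explore
        else:
            a = max(q[s], key=q[s].get)       # exploit
        r = toy_reward(s, a)
        # Random probabilities stand in for real RF/GBM predictions.
        s_next = (rng.randint(0, 1), fault_flag(rng.random(), rng.random()))
        q_update(q, s, a, r, s_next, alpha, gamma)
        s = s_next
    return q


def greedy_policy(q):
    """Best action per state under the learned Q-table."""
    return {s: max(acts, key=acts.get) for s, acts in q.items()}


if __name__ == "__main__":
    print(greedy_policy(train()))
```

In a deep Q-learning version, the table would be replaced by a network that maps continuous state features, including classifier confidence scores, to action values. The feedback path stays the same: classifier output becomes part of the state the agent observes.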

The architecture also ties its reward structure to tangible industrial Key Performance Indicators (KPIs). By linking penalty terms to maintenance costs and downtime, the methodology aims to improve energy efficiency and extend network lifetime. The optimization equations capture the relationships among data transmission rates, energy consumption, and maintenance metrics, promoting efficient resource utilization.
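A KPI-linked reward of this kind can be sketched as a weighted sum, r = w_T·throughput − w_E·energy − w_M·maintenance cost − w_D·downtime. The linear form, the units, and the default weights below are assumptions for illustration and are not taken from the source's optimization equations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepKPIs:
    """KPIs observed over one control interval (hypothetical units)."""
    throughput_mbps: float
    energy_joules: float
    maintenance_cost: float
    downtime_minutes: float


@dataclass(frozen=True)
class RewardWeights:
    """Hypothetical trade-off weights; in practice these would be tuned per site."""
    throughput: float = 1.0
    energy: float = 0.1
    maintenance: float = 0.5
    downtime: float = 2.0


def kpi_reward(k, w=RewardWeights()):
    """Weighted throughput minus weighted energy, maintenance, and downtime penalties."""
    return (w.throughput * k.throughput_mbps
            - w.energy * k.energy_joules
            - w.maintenance * k.maintenance_cost
            - w.downtime * k.downtime_minutes)


if __name__ == "__main__":
    print(kpi_reward(StepKPIs(10.0, 20.0, 4.0, 1.0)))
```

With the default weights, 10 Mbps of throughput, 20 J of energy, a maintenance cost of 4, and 1 minute of downtime give a reward of 10 - 2 - 2 - 2 = 4. Giving downtime the largest weight encodes the assumption that lost production time is the costliest outcome, so the agent learns to accept some extra energy use or maintenance to avoid it.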

Overall, the framework applies machine learning to intelligent wireless resource management and adaptive control for future IIoT applications. Continuous learning keeps the system responsive and effective in dynamic, heterogeneous industrial settings.

The content above is a summary. For more details, see the source article.