Key Takeaways

- Spiking Neural Networks (SNNs) offer enhanced power efficiency and real-time processing for edge and IoT applications, but face design challenges.

- Researchers are improving architecture discovery with Neural Architecture Search (NAS) techniques, including reinforcement learning, evolutionary algorithms, and gradient-based search.

- Understanding and optimizing SNNs requires addressing hardware constraints and developing specialized performance metrics for effective evaluation.

Innovative Approaches in Spiking Neural Networks

Spiking Neural Networks (SNNs) are opening a new path in artificial intelligence, offering power efficiency and real-time processing that suit edge devices and the Internet of Things (IoT). Despite this promise, designing optimal SNN architectures is difficult because of the complex interplay between software models and hardware limitations. A recent comprehensive survey by Kama Svoboda and Tosiron Adegbija from The University of Arizona examines these challenges and emphasizes the importance of co-designing hardware and software.

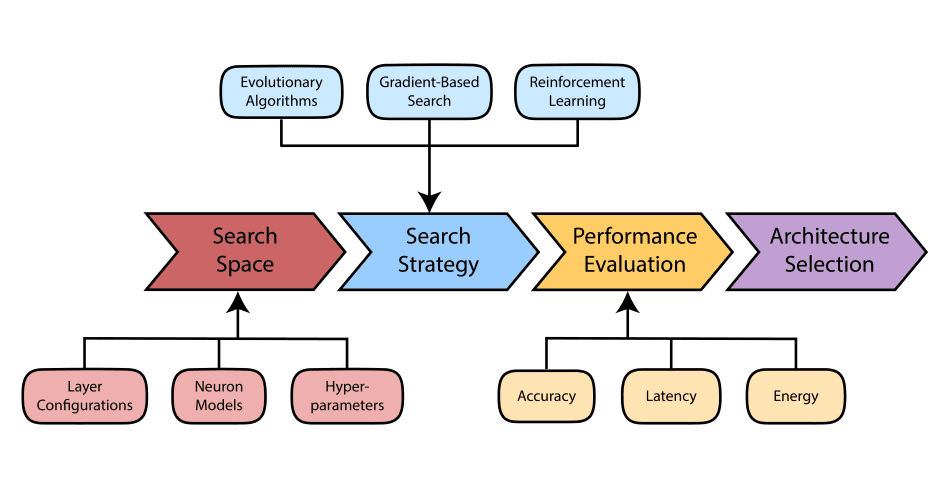

The survey highlights a range of Neural Architecture Search (NAS) techniques for discovering network architectures tailored to specific applications. Notable search strategies include reinforcement learning, evolutionary algorithms, and gradient-based search. Additional approaches such as One-Shot NAS and Zero-Shot NAS have been developed to streamline the search, and bandit algorithms make the exploration of candidate designs more efficient.
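To make the evolutionary strategy mentioned above concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than something taken from the survey: the search space (`SEARCH_SPACE`), the scoring function (`toy_score`, which stands in for a trained network's validation accuracy minus a cost penalty), and the population settings are all hypothetical.

```python
import random

# Hypothetical toy search space for an SNN (not from the survey).
SEARCH_SPACE = {
    "num_layers": [1, 2, 3, 4],
    "neurons_per_layer": [32, 64, 128, 256],
    "time_steps": [4, 8, 16],
}


def random_architecture(rng):
    """Sample one value per dimension of the search space."""
    return {key: rng.choice(values) for key, values in SEARCH_SPACE.items()}


def mutate(arch, rng):
    """Copy the parent and resample a single randomly chosen dimension."""
    child = dict(arch)
    key = rng.choice(list(SEARCH_SPACE))
    child[key] = rng.choice(SEARCH_SPACE[key])
    return child


def toy_score(arch):
    """Made-up stand-in for 'accuracy minus cost'; a real search would train or estimate here."""
    capacity = arch["num_layers"] * arch["neurons_per_layer"]
    quality = 1.0 - 1.0 / (1.0 + capacity / 64.0)
    cost = 0.002 * capacity * arch["time_steps"] / 16.0
    return quality - cost


def evolutionary_search(generations=20, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [random_architecture(rng) for _ in range(population_size)]
    for _ in range(generations):
        # Keep the better half unchanged (elitism), refill with mutated copies.
        population.sort(key=toy_score, reverse=True)
        parents = population[: population_size // 2]
        children = [
            mutate(rng.choice(parents), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return max(population, key=toy_score)
```

Calling `evolutionary_search()` returns the best architecture dictionary found. Because the top half of each generation survives unchanged, the best score never decreases from one generation to the next; in practice the expensive part is the scoring step, which is what One-Shot and Zero-Shot NAS try to make cheaper.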

Beyond these established methods, researchers are investigating newer strategies, including label-free NAS, which searches using unlabeled datasets, and curriculum learning, which incrementally increases the complexity of the search process. These strategies not only diversify the available architectures but also focus on identifying good building blocks, allowing a more effective exploration of the design space.

A critical aspect of this research is accurately estimating a network's performance before it is fully trained, often by using proxy tasks or by predicting network weights. This matters because many NAS methods are computationally expensive. The survey also stresses that SNNs follow operating principles of their own, which distinguish them from conventional artificial neural networks.

Within this context, researchers assess different neuron models, such as the Leaky Integrate-and-Fire (LIF) model and the Hodgkin-Huxley model, noting the trade-off between computational efficiency and biological realism: LIF is simple and cheap to simulate, while Hodgkin-Huxley is far more biologically detailed and far more expensive. Spike encoding schemes, including rate-based and temporal encoding, also significantly influence performance, which underlines the need to evaluate architectural choices thoroughly.
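As an illustration of the two ideas above, the following sketch pairs a simple rate encoder with a discrete-time LIF neuron. It is a minimal example under stated assumptions, not the formulation used in the survey: the function names, the Bernoulli encoding, the reset-to-zero rule, and the default `weight`, `leak`, and `threshold` values are all chosen here for illustration.

```python
import random


def rate_encode(intensity, num_steps, rng):
    """Rate coding: at each step, emit a spike with probability `intensity` (0..1)."""
    return [1 if rng.random() < intensity else 0 for _ in range(num_steps)]


def lif_simulate(input_spikes, weight=0.5, leak=0.9, threshold=1.0):
    """Discrete-time LIF neuron (assumed update rule).

    v <- leak * v + weight * input
    When v reaches the threshold, the neuron spikes and v resets to 0.
    """
    v = 0.0
    output = []
    for spike in input_spikes:
        v = leak * v + weight * spike
        if v >= threshold:
            output.append(1)
            v = 0.0  # hard reset after a spike
        else:
            output.append(0)
    return output


if __name__ == "__main__":
    rng = random.Random(0)
    train = rate_encode(intensity=0.8, num_steps=20, rng=rng)
    print("input :", train)
    print("output:", lif_simulate(train))
```

With the default parameters, a constant input of one spike per step makes the membrane potential go 0.5, 0.95, 1.355, so the neuron fires on every third step. A stronger input intensity therefore maps to a higher output firing rate, which is the basic idea behind rate coding; temporal encoding would instead carry information in when the spikes occur.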

The survey notes that conventional gradient-based optimization techniques may not translate effectively to SNNs, because spikes are discrete events rather than continuous activations. SNN evaluation also calls for dedicated metrics: new ones are being developed to assess spike timing precision and firing rates, which are crucial for understanding how spiking networks behave.
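The two quantities named above can be computed in a few lines. The sketch below shows one simple way to do so; the definitions are assumptions made for illustration (in particular, timing error is measured as the mean distance from each reference spike to the nearest observed spike), and the survey may define these metrics differently.

```python
def spike_times(spike_train, dt=1.0):
    """Convert a binary spike train into a list of spike times."""
    return [i * dt for i, s in enumerate(spike_train) if s]


def firing_rate(spike_train, dt=1.0):
    """Mean firing rate: number of spikes divided by total duration."""
    duration = len(spike_train) * dt
    return sum(spike_train) / duration if duration > 0 else 0.0


def mean_timing_error(observed, reference, dt=1.0):
    """Mean distance from each reference spike to the nearest observed spike.

    Returns None when either train has no spikes, since the error is undefined.
    """
    obs = spike_times(observed, dt)
    ref = spike_times(reference, dt)
    if not obs or not ref:
        return None
    return sum(min(abs(r - o) for o in obs) for r in ref) / len(ref)
```

For example, `firing_rate([0, 1, 0, 1])` gives 0.5 spikes per step, and `mean_timing_error([0, 1, 0, 0, 1], [1, 0, 0, 0, 1])` gives 0.5, because one reference spike is matched exactly and the other is off by one step.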

Moreover, aligning NAS methods with neuromorphic hardware constraints, such as limited numerical precision and asynchronous computation, is essential for producing architectures that are compatible with the target hardware. Current strategies predominantly seek a single, universal architecture; the survey argues that the future lies in tailoring solutions to specific hardware platforms. It calls for co-optimization strategies and hardware-aware design in order to realize the full potential of spiking networks and advance the field of neuromorphic computing.
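A hardware-aware search can apply such constraints as a filter on candidate architectures. The sketch below is purely illustrative: `HardwareProfile` and its limits are hypothetical placeholders rather than the specification of any real neuromorphic chip, and the check assumes dense layers with one layer mapped to one core.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareProfile:
    # All limits are hypothetical, chosen only for this example.
    weight_bits: int
    max_neurons_per_core: int
    max_fan_in: int


def quantize_weight(w, bits, w_max=1.0):
    """Symmetric uniform quantization of w, clipped to [-w_max, w_max]."""
    levels = 2 ** (bits - 1) - 1  # e.g. 7 positive levels for 4 bits
    clipped = max(-w_max, min(w_max, w))
    return round(clipped / w_max * levels) / levels * w_max


def fits_hardware(layer_sizes, hw):
    """Check each layer's size and fan-in against the hardware limits.

    `layer_sizes` lists neurons per layer, input layer first.
    """
    for fan_in, size in zip(layer_sizes, layer_sizes[1:]):
        if size > hw.max_neurons_per_core or fan_in > hw.max_fan_in:
            return False
    return True


if __name__ == "__main__":
    hw = HardwareProfile(weight_bits=4, max_neurons_per_core=256, max_fan_in=512)
    print(fits_hardware([128, 256, 10], hw))   # candidate within the limits
    print(fits_hardware([1024, 256, 10], hw))  # fan-in of 1024 exceeds the limit
    print(quantize_weight(0.3, hw.weight_bits))
```

A search loop could discard any candidate for which `fits_hardware` returns `False`, and evaluate the remaining ones with weights passed through `quantize_weight`, so that the reported accuracy reflects the precision the target platform actually supports.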
