Key Takeaways

- The transformer architecture, introduced in 2017, has reshaped AI and paved the way for large language models (LLMs) and vision language models (VLMs).

- LLMs like Meta’s Llama 3.2 pose significant challenges for efficient deployment on legacy hardware due to their resource demands.

- LLM inference runs on a complex, multi-phase runtime, which makes LLMs significantly harder to deploy than traditional AI networks.

The Impact of Transformers on AI

Since the seminal 2017 paper “Attention Is All You Need,” the transformer architecture has dramatically influenced artificial intelligence, serving as the basis for Large Language Models (LLMs) and Vision Language Models (VLMs). This shift culminated in the public release of ChatGPT in November 2022, which brought transformer technology into mainstream AI applications ranging from conversational agents to medical research.

However, the sheer size of LLMs strains edge devices and older hardware designs that predate the transformer. Earlier state-of-the-art models such as CNNs (Convolutional Neural Networks) and RNNs (Recurrent Neural Networks) had around 85 million parameters, whereas LLMs commonly start at 1 billion parameters and can reach 8 billion or more. A jump of roughly 10 to 100 times makes it hard to fit these models cost-effectively onto hardware that was sized for smaller networks.
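As a rough illustration of that jump, the sketch below estimates the memory needed just to store the weights at a few common numeric precisions. It assumes memory = parameters x bytes per parameter and ignores activations, the attention cache, and runtime overhead, so real footprints are larger.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: activations, KV cache and runtime overhead are ignored.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    models = {"85M-parameter CNN/RNN": 85e6, "1B LLM": 1e9, "8B LLM": 8e9}
    for name, params in models.items():
        row = ", ".join(
            f"{dtype}: {weight_memory_gib(params, dtype):.2f} GiB"
            for dtype in ("fp16", "int8", "int4")
        )
        print(f"{name:>22} -> {row}")
```

At FP16, the 8-billion-parameter model needs roughly 15 GiB for its weights alone, about 94 times the 85-million-parameter network; even at 4-bit precision it still needs about 3.7 GiB.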

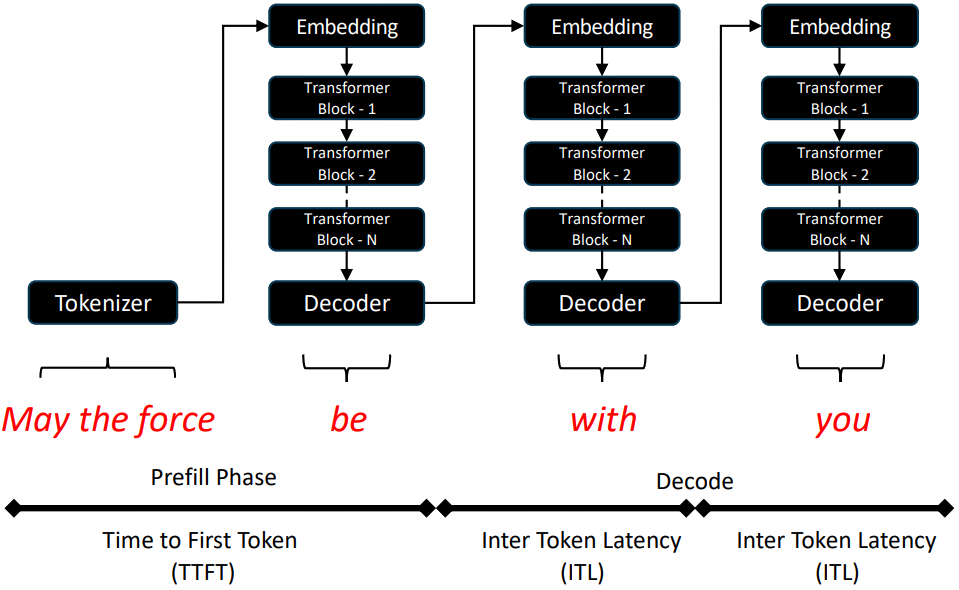

Meta’s Llama 3.2 exemplifies these advancements: its lightweight text models have 1 billion and 3 billion parameters, and its multimodal variants add image understanding at 11 billion and 90 billion parameters. The family is aimed at both enterprise and edge-device deployment. The complexity of LLMs also shows in how they run. In the prefill phase, all prompt tokens are processed in parallel, which is compute-intensive; in the decode phase, the response is generated one token at a time. Because these phases place such different demands on the hardware, keeping it efficiently utilized is difficult.

Understanding LLM Inference Flow

LLM inference begins when a user enters a prompt. A tokenizer converts the text into a sequence of tokens, which then pass through two primary phases: prefill and decode. In the prefill phase, the entire prompt is processed through the transformer blocks, and the key and value vectors computed by each attention layer are stored in a cache (the KV cache) so they do not have to be recomputed later. In the decode phase, the model generates the response one token at a time, computing attention for each new token against the cached keys and values instead of reprocessing the whole sequence at every step.
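To make the prefill/decode split and the role of the cache concrete, here is a toy, single-head attention sketch in pure Python. Everything in it is hypothetical: the prompt is assumed to be already tokenized into integer ids, and the 2x2 weights, the sine/cosine embedding, and `next_token` (a stand-in for a real output layer and sampler) are invented for illustration. It is not how Llama or any production runtime is implemented; it only shows that decoding from the cache gives the same result as recomputing attention over the whole sequence.

```python
import math
from typing import List, Tuple

Vec = List[float]

# Hypothetical 2x2 projection weights for a single toy attention head.
W_Q = [[1.0, 0.5], [0.0, 1.0]]
W_K = [[0.5, 0.0], [1.0, 1.0]]
W_V = [[1.0, 0.0], [0.0, 2.0]]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def matvec(m: List[Vec], v: Vec) -> Vec:
    return [dot(row, v) for row in m]


def embed(token_id: int) -> Vec:
    # Hypothetical deterministic embedding in place of a learned table.
    return [math.sin(token_id), math.cos(token_id)]


def attend(q: Vec, keys: List[Vec], values: List[Vec]) -> Vec:
    # Scaled dot-product attention of one query over every cached position.
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]  # numerically stable softmax
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]


class KVCache:
    """Stores the key and value vector of every token seen so far."""

    def __init__(self) -> None:
        self.keys: List[Vec] = []
        self.values: List[Vec] = []

    def add(self, x: Vec) -> Vec:
        # Project a token once, cache its K and V, and return its query vector.
        self.keys.append(matvec(W_K, x))
        self.values.append(matvec(W_V, x))
        return matvec(W_Q, x)


def prefill(cache: KVCache, prompt_ids: List[int]) -> Vec:
    # Real runtimes process all prompt tokens in one batched pass; this loop
    # computes the same causal result one position at a time for clarity.
    hidden: Vec = []
    for tid in prompt_ids:
        hidden = attend(cache.add(embed(tid)), cache.keys, cache.values)
    return hidden  # output at the last prompt position


def next_token(hidden: Vec, vocab_size: int = 50) -> int:
    # Hypothetical stand-in for the output projection plus greedy sampling.
    return int(abs(hidden[0] + hidden[1]) * 1000) % vocab_size


def generate(prompt_ids: List[int], steps: int) -> Tuple[List[int], KVCache, Vec]:
    cache = KVCache()
    hidden = prefill(cache, prompt_ids)
    generated: List[int] = []
    for _ in range(steps):
        tid = next_token(hidden)
        generated.append(tid)
        # Decode: only the new token is projected; earlier K/V come from the cache.
        hidden = attend(cache.add(embed(tid)), cache.keys, cache.values)
    return generated, cache, hidden


def recompute_last(ids: List[int]) -> Vec:
    # Reference without a cache: re-project every token from scratch.
    keys = [matvec(W_K, embed(t)) for t in ids]
    values = [matvec(W_V, embed(t)) for t in ids]
    return attend(matvec(W_Q, embed(ids[-1])), keys, values)


if __name__ == "__main__":
    prompt = [3, 1, 4]  # hypothetical token ids from a tokenizer
    tokens, cache, hidden = generate(prompt, steps=5)
    print("generated ids:", tokens)
    print("cached positions:", len(cache.keys))  # 3 prompt + 5 generated = 8
    reference = recompute_last(prompt + tokens)
    print("matches full recompute:",
          all(abs(a - b) < 1e-12 for a, b in zip(hidden, reference)))
```

The cached path projects each token exactly once, while the reference re-projects the whole sequence for every new token; that saved work is what the KV cache buys during decode, at the cost of keeping the cache in memory.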

Even with this caching, the very different computational profiles of prefill and decode strain memory access and power budgets. Prefill performs heavy computation across all prompt tokens at once and is typically compute-bound. Decode processes only one token at a time, yet each step must still read the model weights and the growing KV cache from memory, so it is typically memory-bandwidth-bound. Effective LLM deployment therefore requires hardware that remains efficient in both regimes.
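A simple way to see why the two phases stress hardware differently is arithmetic intensity: FLOPs performed per byte moved from memory. The sketch below uses a common back-of-the-envelope approximation (about 2 FLOPs per parameter per token, with all weights read once per forward pass at batch size 1) and ignores attention FLOPs and KV-cache traffic. The accelerator figures are hypothetical, chosen only to illustrate the comparison against the device's ridge point.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    # Hypothetical device figures, chosen only for illustration.
    peak_tflops: float    # dense FP16 throughput, TFLOP/s
    bandwidth_gbs: float  # off-chip memory bandwidth, GB/s

    @property
    def ridge_point(self) -> float:
        # FLOPs per byte needed before compute, not memory, becomes the limit.
        return (self.peak_tflops * 1e12) / (self.bandwidth_gbs * 1e9)


def arithmetic_intensity(num_params: float, tokens_per_pass: int,
                         bytes_per_param: float = 2.0) -> float:
    """Approximate FLOPs per byte of weight traffic for one forward pass.

    Assumes ~2 FLOPs per parameter per token (one multiply-add) and that all
    weights are read from memory once per pass; attention and KV-cache traffic
    are ignored.
    """
    flops = 2.0 * num_params * tokens_per_pass
    bytes_moved = num_params * bytes_per_param
    return flops / bytes_moved


def bound(intensity: float, device: Accelerator) -> str:
    return "compute-bound" if intensity >= device.ridge_point else "memory-bound"


if __name__ == "__main__":
    device = Accelerator(peak_tflops=20.0, bandwidth_gbs=100.0)  # ridge = 200 FLOP/B
    params = 1e9
    prefill = arithmetic_intensity(params, tokens_per_pass=512)  # 512 FLOP/B
    decode = arithmetic_intensity(params, tokens_per_pass=1)     # 1 FLOP/B
    print(f"prefill: {prefill:.0f} FLOP/B, {bound(prefill, device)}")
    print(f"decode:  {decode:.0f} FLOP/B, {bound(decode, device)}")
```

Because the parameter count cancels in this approximation, intensity depends only on tokens per pass and numeric precision: a 512-token prefill reaches about 512 FLOPs per byte, while single-token decode sits near 1, far below the hypothetical device's ridge point of 200.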

LLM Inference Runtime Complexity

LLM inference relies on a multi-phase runtime in which each sequence moves through five distinct stages: prefill, decode, inactive, follow-up prefill, and retired. This lifecycle compounds the difficulty of deploying LLMs compared with conventional AI networks, whose runtime flow is far simpler.

- Prefill Phase: The user's input is tokenized and processed in full, producing the initial key and value vectors for the attention cache. This phase is computationally heavy.

- Decode Phase: The model generates one token at a time, reusing the cached keys and values, so each step requires far less computation than the prefill phase.

- Inactive Phase: The sequence and its cache stay in memory while the system waits for new input, which can tie up memory and create resource bottlenecks.

- Follow-up Prefill: New user input added to an ongoing conversation is processed and appended to the existing cache, extending the context.

- Retired Phase: In this final stage, completed sequences are cleared from active memory, freeing memory and scheduling capacity for new requests.
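The five phases above can be modeled as a small state machine. The sketch below is a hypothetical illustration, not a description of any specific runtime: the allowed transitions are assumptions inferred from the phase descriptions, and `Sequence` only tracks how many tokens its cache holds so that the memory effect of each phase is visible.

```python
from enum import Enum, auto


class Phase(Enum):
    PREFILL = auto()
    DECODE = auto()
    INACTIVE = auto()
    FOLLOW_UP_PREFILL = auto()
    RETIRED = auto()


# Assumed legal transitions; a production scheduler may allow others.
TRANSITIONS = {
    Phase.PREFILL: {Phase.DECODE},
    Phase.DECODE: {Phase.INACTIVE, Phase.RETIRED},
    Phase.INACTIVE: {Phase.FOLLOW_UP_PREFILL, Phase.RETIRED},
    Phase.FOLLOW_UP_PREFILL: {Phase.DECODE},
    Phase.RETIRED: set(),
}


class Sequence:
    """Hypothetical tracker for one conversation's phase and cache size."""

    def __init__(self, prompt_tokens: int) -> None:
        self.phase = Phase.PREFILL
        self.cached_tokens = prompt_tokens  # prefill caches the whole prompt

    def _move(self, target: Phase) -> None:
        if target not in TRANSITIONS[self.phase]:
            raise ValueError(f"illegal transition {self.phase.name} -> {target.name}")
        self.phase = target

    def start_decode(self) -> None:
        self._move(Phase.DECODE)

    def decode_token(self) -> None:
        if self.phase is not Phase.DECODE:
            raise ValueError("tokens are only generated in the DECODE phase")
        self.cached_tokens += 1  # each generated token adds one cache entry

    def pause(self) -> None:
        self._move(Phase.INACTIVE)  # cache stays resident while waiting

    def follow_up(self, new_tokens: int) -> None:
        self._move(Phase.FOLLOW_UP_PREFILL)
        self.cached_tokens += new_tokens  # extends, rather than rebuilds, the context

    def retire(self) -> None:
        self._move(Phase.RETIRED)
        self.cached_tokens = 0  # memory released for new requests


if __name__ == "__main__":
    seq = Sequence(prompt_tokens=20)
    seq.start_decode()
    for _ in range(10):
        seq.decode_token()
    seq.pause()        # INACTIVE, 30 tokens still cached
    seq.follow_up(8)   # FOLLOW_UP_PREFILL, 38 tokens cached
    seq.start_decode()
    seq.retire()       # RETIRED, cache freed
    print(seq.phase.name, seq.cached_tokens)
```

The inactive phase is where memory pressure accumulates: the cache is held even though no computation is happening, and a scheduler serving many conversations must decide how long to keep such sequences resident.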

Managing these phases, together with the large memory footprint of the attention caches, complicates LLM deployment. Meeting these demands efficiently will require processing platforms that advance in three areas: data representation, computational throughput, and multi-core architecture.
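To give a sense of scale for the attention cache, the sketch below applies the standard sizing rule: two tensors (keys and values) per layer, each holding `num_kv_heads x head_dim` values per token. The model shape is an assumed, illustrative configuration loosely sized like a ~1B-parameter model, not the published figures for any specific model. Changing `bytes_per_elem` shows how data representation (FP16 vs. INT8 vs. INT4) directly scales the footprint.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    # Illustrative values loosely sized like a ~1B-parameter model (assumed).
    num_layers: int = 16
    num_kv_heads: int = 8   # may be fewer than query heads with grouped-query attention
    head_dim: int = 64


def kv_cache_bytes(shape: ModelShape, seq_len: int, batch: int = 1,
                   bytes_per_elem: float = 2.0) -> float:
    """Memory for cached keys and values: 2 tensors per layer per token."""
    per_token = 2 * shape.num_layers * shape.num_kv_heads * shape.head_dim
    return per_token * seq_len * batch * bytes_per_elem


if __name__ == "__main__":
    shape = ModelShape()
    for label, nbytes in (("fp16", 2.0), ("int8", 1.0), ("int4", 0.5)):
        mib = kv_cache_bytes(shape, seq_len=8192, bytes_per_elem=nbytes) / 2**20
        print(f"{label}: {mib:.0f} MiB for one 8192-token sequence")
```

Under these assumptions, one 8192-token sequence needs 256 MiB of cache at FP16, and every additional conversation held in the inactive phase adds its own cache on top of the model weights.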

The content above is a summary. For more details, see the source article.