Key Takeaways

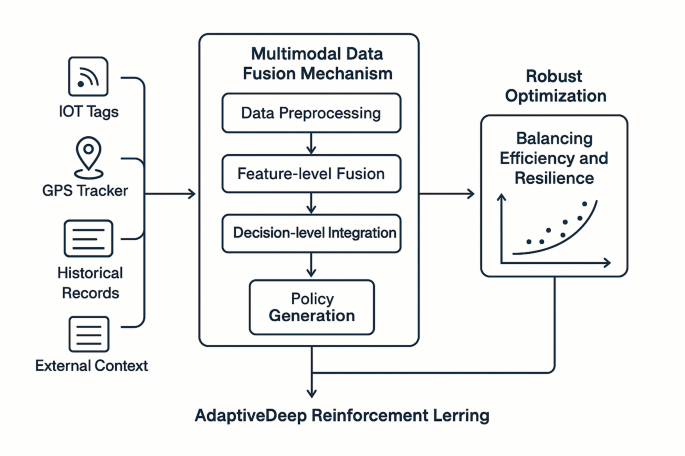

- A multimodal data fusion framework is proposed to enhance decision-making in global logistics by integrating diverse data sources.

- An adaptive deep reinforcement learning (DRL) model optimizes logistics scheduling through continuous interaction with the logistics environment and multimodal inputs.

- The framework supports real-time situational awareness and efficient resource allocation across complex logistics networks while accounting for uncertainty.

Introduction to the Multimodal Data Fusion Framework

Effective decision-making in global logistics networks requires a comprehensive view drawn from many data sources, spanning both physical assets and digital systems. The proposed multimodal data fusion framework integrates these heterogeneous data streams into a unified representation of the logistics state. In doing so, it addresses two significant challenges: temporal misalignment between streams and semantic inconsistencies across sources.

The architecture is hierarchical, with three stages: data preprocessing, feature-level fusion, and decision-level integration. In the preprocessing stage, each data stream is normalized using techniques suited to its modality. Feature-level fusion then applies modality-specific encoders and cross-modal attention mechanisms to produce a common latent representation, so that the fused result retains relevant, high-quality information from every modality.
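The preprocessing and feature-level fusion stages can be illustrated with a small NumPy sketch. The summary does not specify the normalization method, encoder architecture, or attention variant, so this example assumes z-score normalization, hypothetical random linear encoders (`linear_encoder`), and standard scaled dot-product attention in which sensor tokens attend to document tokens. The two modalities and their dimensions are likewise invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def zscore(x: np.ndarray) -> np.ndarray:
    """Preprocessing: scale each feature column to zero mean and unit variance."""
    mean = x.mean(axis=0, keepdims=True)
    std = x.std(axis=0, keepdims=True) + 1e-8  # guard against constant columns
    return (x - mean) / std

def linear_encoder(in_dim: int, latent_dim: int):
    """Hypothetical modality-specific encoder: fixed random projection followed by tanh."""
    w = rng.normal(scale=1.0 / np.sqrt(in_dim), size=(in_dim, latent_dim))
    return lambda x: np.tanh(x @ w)

def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_modal_attention(query: np.ndarray, context: np.ndarray):
    """Scaled dot-product attention: each query token attends over all context tokens."""
    scores = query @ context.T / np.sqrt(query.shape[-1])  # (n_query, n_context)
    weights = softmax(scores, axis=-1)                     # each row sums to 1
    return weights @ context, weights                      # (n_query, latent_dim)

latent_dim = 16
# Hypothetical modalities: 10 sensor readings with 4 features, 5 document records with 32 features
sensor = zscore(rng.normal(size=(10, 4)))
docs = zscore(rng.normal(size=(5, 32)))

h_sensor = linear_encoder(4, latent_dim)(sensor)  # (10, 16)
h_docs = linear_encoder(32, latent_dim)(docs)     # (5, 16)

attended, attn = cross_modal_attention(h_sensor, h_docs)
# Shared latent vector: concatenate own and attended features, then pool over tokens
fused = np.concatenate([h_sensor, attended], axis=-1).mean(axis=0)
print(fused.shape)  # (32,)
```

In a trained system the encoder weights would be learned; concatenating each modality's own encoding with its attended context is only one simple way to form the shared latent vector.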

To align asynchronous data streams, the framework uses a multi-rate fusion approach: high-frequency data are aggregated and low-frequency data are interpolated onto a common time base. To manage uncertainty, it incorporates evidential deep learning principles, which let the model estimate the uncertainty of its outputs and still make effective decisions when some data sources are unreliable.
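A minimal sketch of both ideas follows, under stated assumptions. Multi-rate alignment is shown as window-averaging a 1 Hz stream and linearly interpolating a sparse stream onto a shared time grid; the grid, window length, and streams are hypothetical. For uncertainty, it uses the common Dirichlet (subjective-logic) formulation of evidential deep learning, where non-negative evidence e_k gives alpha_k = e_k + 1 and uncertainty u = K / sum(alpha); the summary does not state whether the source uses exactly this form.

```python
import numpy as np

def aggregate_high_freq(t, x, grid, window):
    """Mean of the high-frequency samples in [g - window, g) for each grid time g (NaN if none)."""
    out = np.full(len(grid), np.nan)
    for i, g in enumerate(grid):
        mask = (t >= g - window) & (t < g)
        if mask.any():
            out[i] = x[mask].mean()
    return out

def interpolate_low_freq(t, x, grid):
    """Linear interpolation of sparse low-frequency samples onto the grid."""
    return np.interp(grid, t, x)

def dirichlet_uncertainty(evidence):
    """Evidential (Dirichlet) uncertainty: alpha = e + 1, S = sum(alpha), u = K / S."""
    evidence = np.asarray(evidence, dtype=float)
    alpha = evidence + 1.0
    strength = alpha.sum()
    belief = evidence / strength          # per-class belief mass
    uncertainty = len(alpha) / strength   # belief.sum() + uncertainty == 1
    expected_prob = alpha / strength      # mean of the Dirichlet distribution
    return belief, uncertainty, expected_prob

# Hypothetical streams: a 1 Hz sensor and a reading every 20 s
t_fast = np.arange(0.0, 60.0, 1.0)
x_fast = np.sin(t_fast / 10.0)
t_slow = np.array([0.0, 20.0, 40.0, 60.0])
x_slow = np.array([1.0, 3.0, 2.0, 4.0])

grid = np.array([10.0, 20.0, 30.0, 40.0, 50.0])  # hypothetical common 0.1 Hz time base
fast_on_grid = aggregate_high_freq(t_fast, x_fast, grid, window=10.0)
slow_on_grid = interpolate_low_freq(t_slow, x_slow, grid)
aligned = np.stack([fast_on_grid, slow_on_grid], axis=1)  # (5, 2) aligned features

belief, u, p = dirichlet_uncertainty([8.0, 1.0, 1.0])  # strong evidence for class 0
_, u_none, _ = dirichlet_uncertainty([0.0, 0.0, 0.0])  # no evidence at all
print(round(float(u), 3), float(u_none))  # 0.231 1.0
```

With zero evidence, u equals 1 (complete uncertainty), so a downstream fusion step could down-weight a source whose output carries little evidence.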

Adaptive Deep Reinforcement Learning Model

Building on the fusion framework, an adaptive deep reinforcement learning model optimizes logistics scheduling decisions through continuous interaction with the logistics environment. The model uses a modified Soft Actor-Critic (SAC) algorithm that accepts multimodal inputs, allowing it to adapt to the dynamic logistics landscape.
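The summary does not say how Soft Actor-Critic is modified, so the sketch below shows only the standard SAC quantities such a variant would start from: the clipped double-Q soft Bellman target and the entropy-regularized actor loss. The helper names (`soft_q_target`, `critic_loss`, `actor_loss`), the temperature `alpha`, and the discount `gamma` are illustrative assumptions, and NumPy arrays stand in for network outputs.

```python
import numpy as np

def soft_q_target(reward, done, next_q1, next_q2, next_log_prob, gamma=0.99, alpha=0.2):
    """Clipped double-Q soft Bellman target used by standard SAC:
    y = r + gamma * (1 - done) * (min(Q1', Q2') - alpha * log pi(a' | s'))."""
    soft_value = np.minimum(next_q1, next_q2) - alpha * next_log_prob
    return reward + gamma * (1.0 - done) * soft_value

def critic_loss(q_pred, target):
    """Mean squared error between a critic's prediction and the (fixed) target."""
    return np.mean((q_pred - target) ** 2)

def actor_loss(q1, q2, log_prob, alpha=0.2):
    """Policy objective to minimize: E[alpha * log pi(a | s) - min(Q1, Q2)]."""
    return np.mean(alpha * log_prob - np.minimum(q1, q2))

# Batch of two hypothetical transitions; the second ends an episode (done = 1)
reward = np.array([1.0, 0.5])
done = np.array([0.0, 1.0])
y = soft_q_target(reward, done,
                  next_q1=np.array([10.0, 4.0]),
                  next_q2=np.array([9.0, 5.0]),
                  next_log_prob=np.array([-1.0, -2.0]))
# First target: 1 + 0.99 * (9 + 0.2) = 10.108; second target: just the reward, 0.5
print(y)
```

Taking the minimum of two critics counters Q-value overestimation, and the entropy term keeps the policy exploratory, which is useful when logistics conditions keep shifting.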

The state space captures the condition of the logistics network and is represented as heterogeneous tensors that combine various data types. The action space is defined to cover all scheduling decisions, and hierarchical decomposition keeps its complexity manageable.
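The following sketch illustrates a heterogeneous state and a two-level action under hypothetical assumptions: the state fields (`node_features`, `edge_travel_times`, `vehicle_positions`, `text_embedding`) and the vehicle-then-destination split are invented for illustration, not taken from the source. Factoring the policy as pi(vehicle) * pi(destination | vehicle) keeps each decision small instead of choosing among all vehicle-destination pairs at once.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class LogisticsState:
    """Hypothetical heterogeneous state: each field has its own shape and meaning."""
    node_features: np.ndarray      # (num_nodes, f), e.g. inventory and congestion per hub
    edge_travel_times: np.ndarray  # (num_nodes, num_nodes) travel-time matrix
    vehicle_positions: np.ndarray  # (num_vehicles,) integer hub indices
    text_embedding: np.ndarray     # (d_text,), e.g. encoded disruption alerts

    def flatten(self) -> np.ndarray:
        """Concatenate all fields into one vector for a simple MLP policy."""
        return np.concatenate([
            self.node_features.ravel(),
            self.edge_travel_times.ravel(),
            self.vehicle_positions.astype(float),
            self.text_embedding,
        ])

@dataclass(frozen=True)
class HierarchicalAction:
    vehicle: int      # high-level decision: which asset to dispatch
    destination: int  # low-level decision: where that asset goes

def softmax(z):
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())
    return e / e.sum()

def sample_hierarchical_action(high_logits, low_logits, rng):
    """Sample a vehicle, then a destination conditioned on it.
    low_logits[v] holds the destination logits for vehicle v."""
    p_vehicle = softmax(high_logits)
    v = int(rng.choice(len(p_vehicle), p=p_vehicle))
    p_dest = softmax(low_logits[v])
    d = int(rng.choice(len(p_dest), p=p_dest))
    return HierarchicalAction(v, d), float(p_vehicle[v] * p_dest[d])

rng = np.random.default_rng(1)
num_nodes, num_vehicles = 4, 2
state = LogisticsState(
    node_features=rng.random((num_nodes, 3)),
    edge_travel_times=rng.random((num_nodes, num_nodes)),
    vehicle_positions=np.array([0, 2]),
    text_embedding=rng.random(8),
)
action, prob = sample_hierarchical_action(
    rng.normal(size=num_vehicles), rng.normal(size=(num_vehicles, num_nodes)), rng)
print(state.flatten().shape, action)
```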

The reward function balances multiple logistics performance objectives, such as cost, time, reliability, and sustainability, and its weighting adjusts dynamically as logistics priorities change.
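A weighted-sum scalarization is one common way to combine such objectives; the sketch below assumes it, along with metrics already normalized to [0, 1] and a hypothetical `priorities` dictionary whose entries are rescaled into weights. Cost and time are penalized, while reliability and a sustainability score (higher is better) are rewarded. The source may combine its objectives differently.

```python
# Hypothetical objectives; metrics are assumed pre-normalized to [0, 1]
SIGNS = {
    "cost": -1.0,           # lower cost is better
    "time": -1.0,           # shorter transit time is better
    "reliability": 1.0,     # higher on-time reliability is better
    "sustainability": 1.0,  # higher sustainability score is better
}

def normalize_weights(priorities: dict) -> dict:
    """Rescale non-negative priority scores so the weights sum to 1."""
    total = sum(priorities.values())
    if total <= 0:
        raise ValueError("priorities must contain at least one positive value")
    return {k: v / total for k, v in priorities.items()}

def multi_objective_reward(metrics: dict, priorities: dict) -> float:
    """Weighted-sum scalarization of the objectives under the current priorities."""
    weights = normalize_weights(priorities)
    return sum(SIGNS[k] * weights[k] * metrics[k] for k in SIGNS)

metrics = {"cost": 0.4, "time": 0.5, "reliability": 0.9, "sustainability": 0.6}
balanced = {"cost": 1.0, "time": 1.0, "reliability": 1.0, "sustainability": 1.0}
peak_season = {"cost": 1.0, "time": 3.0, "reliability": 1.0, "sustainability": 1.0}

print(round(multi_objective_reward(metrics, balanced), 4))     # 0.15
print(round(multi_objective_reward(metrics, peak_season), 4))  # -0.0667
```

Shifting priority toward time, as in the hypothetical peak-season case, lowers the reward for the same fairly slow shipment, which is how the trade-off can move as priorities change.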

Implementation and Policy Generation

The trained multimodal deep reinforcement learning model is used to generate adaptive scheduling policies for logistics operations. A hierarchical decision-making approach breaks complex logistics decisions into manageable sub-decisions, improving efficiency.

The resource allocation component matches transportation assets to logistics tasks based on demand patterns. Route planning uses a two-tier approach: it first selects high-level corridors and then determines specific paths within them, adjusting dynamically to real-time conditions.
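The two-tier route planning idea can be sketched with Dijkstra's algorithm applied twice: first on a coarse corridor graph between regions, then on the detailed road graph restricted to nodes in the chosen corridor's regions. The graphs, region labels, helper names, and the replan-on-update pattern are hypothetical; the summary does not specify the search algorithm used at either tier.

```python
import heapq

def dijkstra(graph, source, target, allowed=None):
    """Shortest path on a dict-of-dicts weighted digraph.
    If `allowed` is given, only nodes in that set may be entered."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue
        visited.add(u)
        if u == target:
            break
        for v, w in graph.get(u, {}).items():
            if allowed is not None and v not in allowed:
                continue
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if target not in dist:
        return None, float("inf")
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], dist[target]

def two_tier_route(corridors, roads, region_of, source, target):
    """Tier 1: pick a corridor (sequence of regions). Tier 2: route inside it."""
    region_path, _ = dijkstra(corridors, region_of[source], region_of[target])
    if region_path is None:
        return None, float("inf")
    allowed = {n for n, r in region_of.items() if r in region_path}
    return dijkstra(roads, source, target, allowed)

# Hypothetical network: regions A to D, one hub per region
corridors = {"A": {"B": 5, "C": 6}, "B": {"D": 5}, "C": {"D": 6}}
roads = {"a1": {"b1": 5, "c1": 6}, "b1": {"d1": 5}, "c1": {"d1": 6}}
region_of = {"a1": "A", "b1": "B", "c1": "C", "d1": "D"}

print(two_tier_route(corridors, roads, region_of, "a1", "d1"))  # (['a1', 'b1', 'd1'], 10.0)

# Real-time update: congestion on the B-D corridor, then replan
corridors["B"]["D"] = 20
roads["b1"]["d1"] = 20
print(two_tier_route(corridors, roads, region_of, "a1", "d1"))  # (['a1', 'c1', 'd1'], 12.0)
```

After the B-D corridor is disrupted, replanning moves the route to the C corridor. Restricting the second tier to the selected regions shrinks the search space, at the cost of possibly missing a shortcut that leaves the chosen corridor.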

Finally, task scheduling prioritizes operational efficiency while meeting deadlines and respecting resource constraints. The integrated framework continuously adapts to changing logistics conditions, enabling responsive and effective decision-making across global logistics networks.
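As an illustration of deadline-aware scheduling under a resource limit, the sketch below uses greedy earliest-deadline-first (EDF) list scheduling on identical resources, such as vehicles or loading docks. EDF is a swapped-in classical heuristic, not the learned policy described above; the `Task` type, tasks, durations, and deadlines are hypothetical.

```python
import heapq
from dataclasses import dataclass

@dataclass(frozen=True)
class Task:
    name: str
    duration: float
    deadline: float

def edf_schedule(tasks, num_resources):
    """Greedy non-preemptive EDF: take tasks in deadline order and give each one
    to the resource that becomes free earliest. Returns
    (name, resource, start, finish, on_time) tuples."""
    free_at = [(0.0, r) for r in range(num_resources)]  # (time free, resource id)
    heapq.heapify(free_at)
    schedule = []
    for task in sorted(tasks, key=lambda t: t.deadline):
        start, r = heapq.heappop(free_at)
        finish = start + task.duration
        schedule.append((task.name, r, start, finish, finish <= task.deadline))
        heapq.heappush(free_at, (finish, r))
    return schedule

# Hypothetical tasks (durations and deadlines in hours)
tasks = [
    Task("inspect", 1, 10),
    Task("deliver", 3, 6),
    Task("load", 2, 3),
    Task("customs", 4, 5),
]
for row in edf_schedule(tasks, num_resources=2):
    print(row)
```

With two resources every task meets its deadline; with one, `customs` and `deliver` finish late, which is the kind of constraint violation a learned scheduler would weigh against cost.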

The content above is a summary. For more details, see the source article.